Behind the Scenes - Escape Room

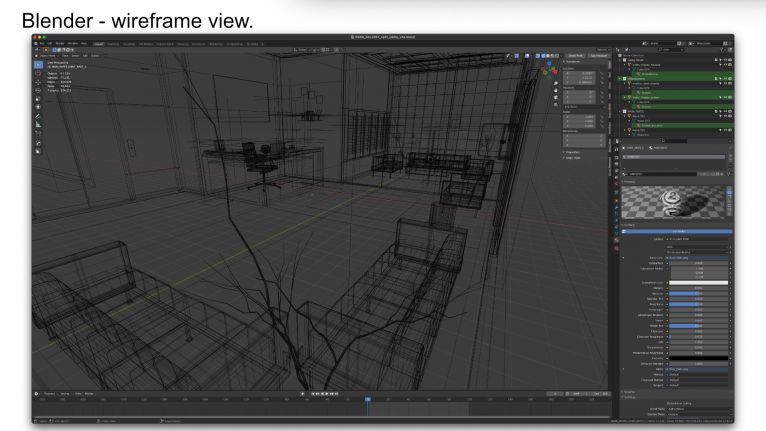

The Escape Room is an interactive short created using Blender 2.93 and Verge3D 3.8. The following is a Behind the Scenes look at the making of the Escape Room.

The Escape Room was developed by Route 66 Digital as an internal R&D project and was released to the public so they could join in the fun. When we started this project we had a few specific items we were trying to learn more about: memory management, redraws, lighting, and light probes.

For us, memory management is always one of the biggest concerns. It affects which devices can run the application, the frames per second (FPS), and the load times. Up to this project, most of the memory consumption came from how we made and textured models. My background in video animation made for some very large models and textures that were not suited for online use. I was making detailed models with hundreds of parts per model and an equally large number of independent textures, or buying models online and using them without optimization. For this and all future projects, I knew I needed a new process. For the room, I ditched the idea of modeling in NURBS and used the native polygon modeling in Blender. I spent time trying to create high-resolution models with my usual detail and had planned to bake those textures and normals down to a lower-poly version. But the room is made up mostly of simple cubes, so I skipped this step and created only low-poly models, adding bevels and subdivision surfaces where I needed the detail for the maps. The other reason I skipped this process was the normal maps. Given the quantity I needed and their large size, I opted not to use normal maps for this project.
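To give a feel for why texture size and count dominate memory, here is a minimal sketch of the usual back-of-the-envelope estimate. It assumes textures are held uncompressed on the GPU as 8-bit RGBA with a full mipmap chain (which adds roughly one third), regardless of how small the JPEG or PNG is on disk. The function names and the sizes in the loop are illustrative, not figures from this project.

```python
def texture_bytes(width, height, channels=4, bytes_per_channel=1, mipmaps=True):
    """Approximate GPU memory for one uncompressed texture, in bytes."""
    base = width * height * channels * bytes_per_channel
    # A full mipmap chain adds roughly one third on top of the base level.
    return base * 4 // 3 if mipmaps else base


def to_mib(n_bytes):
    """Convert bytes to mebibytes."""
    return n_bytes / (1024 * 1024)


# Illustrative square texture sizes (assumed, not taken from the project).
for size in (2048, 4096, 8192):
    print(f"{size} x {size}: {to_mib(texture_bytes(size, size)):.0f} MiB")
```

Under these assumptions every extra map, including a normal map, costs as much as a color map of the same resolution, which is why dropping a whole category of maps can matter more than shaving file sizes.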

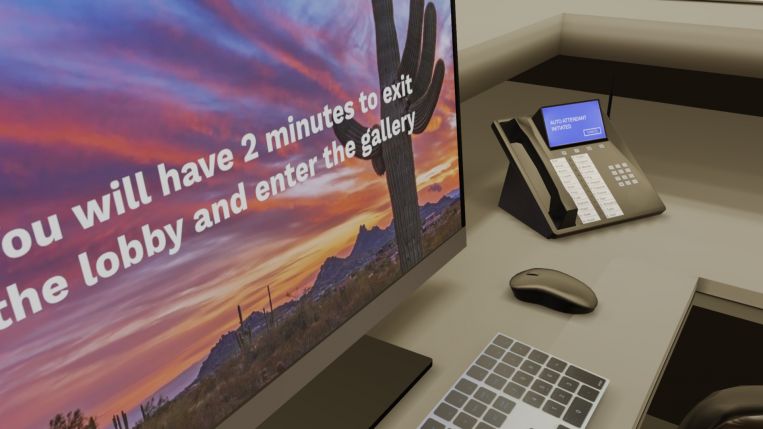

I also spent some time just deleting objects that had high polygon counts and did not contribute to the game experience. The phone is a perfect example. In my original build I had a phone cord. The cord itself consisted of over 100,000 triangles. Since it was not a key element, I deleted it and changed the phone to cordless. I did the same for the mouse and the monitor, just deleting cables to reduce the triangle count.

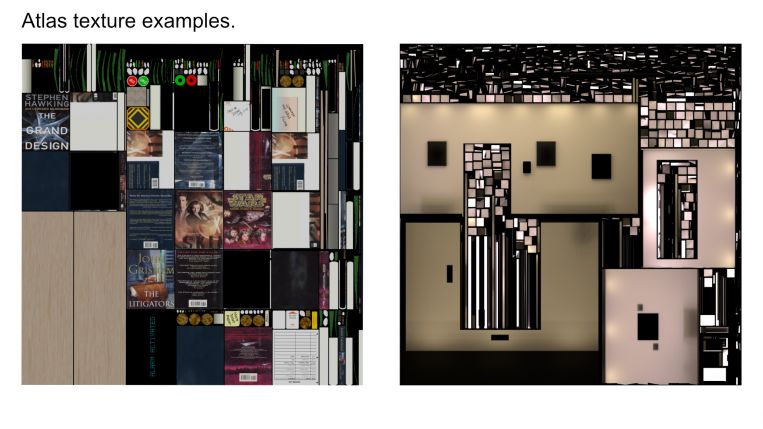

After completing the initial build, I had roughly 1.4 million triangles, approximately 1,200 unique objects, 86 lights, and roughly 700 MB in textures. Next came the process of baking the atlas maps. This process took the longest. Initial testing and noise levels in the maps required that I bake the textures at 8K with 10,000 samples using Cycles. Over the next three months I tested many different baking plugins and found that, at least for me, the best results came from just setting the bakes up by hand. There are several YouTube videos about how to bake multiple textures to a map. What they never seem to tell you is the very important secret: noise. More importantly, they don't mention how to overcome it. I thought Blender would have just handled it while rendering the bake, but it does not. The good news is that the secret to removing the noise is built into Blender. Blender's compositor has a denoiser that works quite well as long as the smallest detail in the textures being denoised is 10x larger than the noise; otherwise the denoiser will effectively erase the small details. This was especially apparent with text. To avoid losing the text, I ended up texturing those items independently with their own individual maps and saving them as PNGs. JPEGs can be used, but when you need detail and color accuracy PNGs are preferred. For items that are less important, I used JPEGs to save on overhead.
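The 10x rule of thumb above can be written down as a simple check for deciding which items are safe to bake into a denoised atlas and which should get their own map. This is only a sketch: the function names, the item names, and the pixel measurements are hypothetical, and the threshold is the rule of thumb from the text rather than anything Blender enforces.

```python
def denoise_is_safe(smallest_detail_px, noise_px, min_ratio=10.0):
    """Return True if the smallest detail is at least min_ratio times the noise size."""
    if noise_px <= 0:
        raise ValueError("noise_px must be positive")
    return smallest_detail_px / noise_px >= min_ratio


def split_for_baking(items, noise_px, min_ratio=10.0):
    """Split (name, smallest_detail_px) pairs into atlas items and individually mapped items."""
    atlas, individual = [], []
    for name, detail_px in items:
        target = atlas if denoise_is_safe(detail_px, noise_px, min_ratio) else individual
        target.append(name)
    return atlas, individual


# Hypothetical measurements in pixels, for illustration only.
items = [("desk", 60), ("wall", 120), ("keypad_label", 8)]
atlas, individual = split_for_baking(items, noise_px=2)
print("atlas:", atlas)
print("individual maps:", individual)
```

In this made-up example the label with fine text falls below the threshold and is routed to its own map, mirroring how the text items were handled.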

So after three months the maps were completed. I spent another week reducing and optimizing the scene geometry and applying the baked maps. I had captured all the lighting and shadows in the baked textures, so I was able to delete all the lights. The final scene has 72,331 vertices, 134,253 triangles, 134 objects, and 0 lights. Having zero lights was key to lowering redraws, which in turn improves the performance of the application. As an added bonus, the rendered scene looked more in line with a video than it would have with physical lighting. So that's a win-win.
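For a sense of scale, the before and after figures quoted in the text can be turned into percentage reductions. This is plain arithmetic on the numbers above; the initial counts were described as approximate, so the percentages are approximate too. The helper name is just for illustration.

```python
def percent_reduction(before, after):
    """Percentage by which a count shrank from before to after."""
    if before <= 0:
        raise ValueError("before must be positive")
    return 100.0 * (before - after) / before


# Figures quoted in the text; the initial counts are approximate.
scene = {
    "triangles": (1_400_000, 134_253),
    "objects": (1_200, 134),
    "lights": (86, 0),
}

for name, (before, after) in scene.items():
    pct = percent_reduction(before, after)
    print(f"{name}: {before:,} -> {after:,} ({pct:.1f}% fewer)")
```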

With three of the four goals completed, it was time to move on to light probes. The glass walls in the middle of the room reflected the HDR background and we needed it to reflect the room. There were other objects in the room that needed reflection attention as well. I started by placing small reflection cubes throughout the scene but quickly realized the performance price. The redraw count went through the roof and playback suffered. Although not perfect, I settled for one large reflection cube. In the next release I will probably add one for the floor.

At this point the research was complete and the project was ready to be backed up and stored for reference, but I had another idea: the Escape Room. Once the project was exported to glTF, the focus became all about puzzles and JavaScript.

The game interaction uses standard Verge3D puzzles such as on drag, on rotate, and on click. We did notice that for drag events it's better to have the camera off-angle than straight on; otherwise the combination lock would rotate in unpredictable directions, making it impossible to enter the combination. I utilized raycasting to navigate, along with a constraint material to keep the camera within the bounds of the room. Since this was going to be available on mobile phones, I set up different tween targets for mobile and desktop. After a few days the puzzles were complete, or so I thought.

First-round QA and internal testing sent this project back to the beginning a few times, with a few textures re-baked to optimize color compression and to fix modeling errors that were showing up as rendering oddities. During this time my prototype menu system was being remade by our programming team. They created some great JavaScript that allowed me to implement a preloader with video and a menu system. Game inventory items, hints, camera rotation controls, and sound control were added. After about a month of testing and refining, we moved on to the preloader.

With the release of iOS 15 and an update to Safari, the methods we had previously used to play video before entering an experience needed an update. We decided to encapsulate the intro video in the preloader and use HiDPI buttons to let the user start the experience or skip the intro and jump right to the game.

We had a lot of fun figuring out the game. I want to thank everyone at Route 66 Digital for letting me create this, as well as Soft8Soft for their great support. A special thank you to all the users on the forums who had great insights to get me past a few hurdles.

We hope you enjoy the game.

- Play it here: https://webgl.r66dapps.com/escapev1/

- Trailer: https://youtu.be/EjB3nnkUSgw

- Other project examples using Verge3D: https://www.r66d.com/web-gl